The implementation maturity model (IMM) is an instrument to help an organization in assessing and determining the degree of maturity of its implementation processes.

This model consists of two important components:

- Five maturity levels, adopted from the capability maturity model (CMM) of the Software Engineering Institute (SEI). By assessing the maturity of different aspects of the implementation processes, it becomes clear what their strengths and weaknesses are, and where improvements are needed.

- The implementation maturity matrix, which is an adjusted version of the test maturity matrix found in the test process improvement (TPI) model developed by Sogeti. The IMM matrix allows an organization to gain insight into the current situation of its implementation processes and into how it can reach the desired situation (i.e. a higher maturity level).

Maturity levels of the IMM

The IMM adopts the five maturity levels from the CMM.

According to SEI (1995): "Maturity in this context implies a potential for growth in capability and indicates both the richness of an organization’s implementation process and the consistency with which it is applied in projects throughout the organization."

The five maturity levels of the IMM are:

Level 0 – Initial

At this level, the organization lacks a stable environment for the implementation. Values are given to implementation aspects and implementation factors in an ‘ad hoc’ fashion and there is no interconnection between them. Organizational processes and goals are not considered centrally during implementation projects and communication hardly takes place. The overall implementation lacks structure and control and its efficiency depends on individual skills, knowledge and motivation.

Level A – Repeatable

Activities are based on the results of previous projects and the demands of the current one. Implementation aspects are considered during implementation projects. Project standards are documented per aspect and the organization prevents any deviant behavior or actions. Previous successes can be repeated due to stabilized planning and control.

Level B – Defined

The process of implementing is documented throughout the organization rather than per aspect. Projects are carried out under guidance of project operation standards and an implementation strategy. Each project will be preceded by preparations to assure conformity with the processes and goals within the organization.

Level C – Managed

Projects are started and supervised by change management and/or process management. Implementing becomes predictable and the organization is able to develop rules and conditions regarding the quality of the products and processes (performance management). Deviating behavior is detected and corrected immediately. The implemented IT-solutions are predictable and of high quality, and the organization is willing and able to work with them. At this level, implementing has become a ‘way of life’.

Level D – Optimizing

The whole organization focuses on the continuous improvement of the implementation processes. The organization possesses the means to detect weaknesses and to strengthen the implementation process proactively. Analyses are carried out to find the causes of errors and mistakes. Each project is evaluated after closure to prevent recurrence of known mistakes.

IMM Assessment

By executing an IMM assessment, the overall maturity for the implementation processes within an organization can be assessed and determined. In this section, this process will be further elaborated.

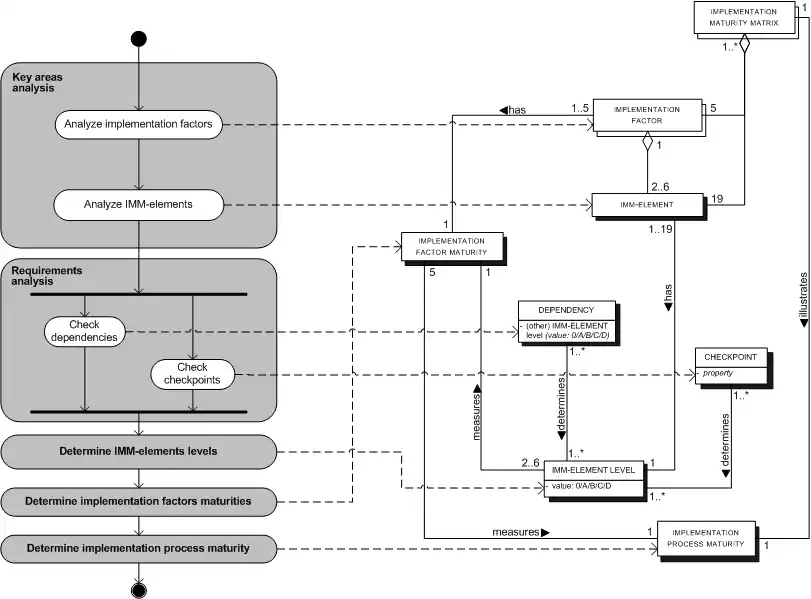

Process-data diagram

The process-data diagram (Figure 1) depicts the process of determining the maturity level of the implementation process under guidance of the IMM. Note that it only represents the evaluation process and not the process of determining actions to improve an organization's way of implementing.

The meta-process model (see meta-process modeling) on the left-hand side of the diagram depicts the activities of the process and the transitions between them. On the right-hand side, a meta-data model depicts the concepts produced during the process. By integrating the meta-process and meta-data model, the process-data diagram reveals the relations between activities (a process) and concepts (the data produced in the process). This relationship is illustrated by each dotted arrow connecting an activity of the meta-process model with a concept of the meta-data model (see also meta-modeling).

The goal of the whole assessment process is to determine and insert relevant values into the Implementation Maturity Matrix so that the overall maturity of an organization's implementation processes can be derived from it. The relevant values needed are:

- Implementation factors,

- IMM-elements, and

- corresponding maturity levels of the two mentioned above.

These three concepts will be further elaborated in the sections below.

Implementation factors

Implementation factors are aspects that have to be taken into account when carrying out implementation projects. The IMM distinguishes five implementation factors:

- Process

- Human resource

- Information

- Means

- Control

In the optimal situation, an implementation project will strive to create balance and alignment between the five factors. In other words, an organization should focus on all five aspects when planning and executing implementation projects.

Once the maturity of the implementation factors has been determined, the overall maturity of the implementation processes can be determined as well. But before this, it is necessary to analyze the constituent parts of each of the factors, which are also called the ‘IMM-elements’.

IMM-elements and levels

In each implementation process, certain areas (elements) need specific attention in order to achieve a well-defined process. These areas, also called IMM-elements, are therefore the basis for improving and structuring the implementation process. The IMM has a total of 19 elements, which are grouped under the above-mentioned implementation factors. Altogether, they reflect the entire implementation process (Rooimans et al., 2003, p. 198; Koomen & Pol, 1998).

Below are the five implementation factors with their corresponding elements (Table 1). These elements, however, will not be further elaborated since the scope here is only on the IMM assessment process itself.

Table 1: Implementation factors and IMM-elements.

| Process | Human resource | Information | Means | Control |
|---|---|---|---|---|
| Valuation aspects | People type | Metrics | Process tools | Estimating and planning |
| Phasing | Involvement degree | Reporting | Implementation tools | Scope of methodology |
| Implementation strategy | Acceptance degree | Management | Implementation functions | |
| Integration | Communication channels | | | |
| Change management | Balance & Interrelation | | | |
| Process management | | | | |
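The grouping in Table 1 can also be written down as a simple mapping, which makes it easy to confirm that the elements add up to 19. The following is a minimal Python sketch; the name `IMM_ELEMENTS` is purely illustrative and not part of the IMM itself.

```python
# Implementation factors and their IMM-elements, transcribed from Table 1.
# The variable name IMM_ELEMENTS is an illustrative choice, not IMM terminology.
IMM_ELEMENTS = {
    "Process": [
        "Valuation aspects",
        "Phasing",
        "Implementation strategy",
        "Integration",
        "Change management",
        "Process management",
    ],
    "Human resource": [
        "People type",
        "Involvement degree",
        "Acceptance degree",
        "Communication channels",
        "Balance & Interrelation",
    ],
    "Information": ["Metrics", "Reporting", "Management"],
    "Means": ["Process tools", "Implementation tools", "Implementation functions"],
    "Control": ["Estimating and planning", "Scope of methodology"],
}

# Count the elements across all five factors.
total_elements = sum(len(elements) for elements in IMM_ELEMENTS.values())
print(total_elements)  # 19
```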

Just like the implementation factors, each element can assume a level of 0 or A through D. The maximum level that can be achieved varies per element, so not every element can reach level D (optimizing), for example. An element's characteristics change as it rises from a lower level to a higher one: the higher the level, the better the element is organized, structured and integrated into the implementation process. Take, for example, the element ‘implementation tools’ under the factor ‘means’. These are automated tools used during implementation projects, such as tools for documenting reports. At level A (repeatable), the tools used may vary per project, while at level B (defined), these tools are standardized for similar projects.

By looking at relevant documentation and carrying out interviews, an organization can assess the current status of the elements and hence determine on which maturity levels they are.

Dependencies and checkpoints

To determine the level of an IMM-element, there are specific dependencies to consider. Dependencies state that other IMM-elements need to have achieved certain levels before the IMM-element in focus can be classified into a specific level. For example, element 1 can only achieve level B if elements 5 and 9 have reached levels B and C respectively. Each implementation process will result in different dependencies among IMM-elements and their levels (Rooimans et al., 2003, pp. 166–169).

In addition, each level consists of certain requirements for the elements. The requirements, also called ‘checkpoints’, are crucial to determining the level of an element. An element cannot achieve a higher level if it does not conform to the requirements of that level. Moreover, the checkpoints of a level also comprise those of lower levels. Thus, an element that has reached level B for example needs to have met the requirements of level B as well as level A.
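The interplay of dependencies and cumulative checkpoints described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function `determine_level`, its parameters and the data layout are hypothetical, and the sketch assumes that levels are climbed one at a time from 0 upward.

```python
LEVELS = ["0", "A", "B", "C", "D"]  # ordered from lowest to highest


def determine_level(checkpoints_met, dependencies, other_levels, max_level="D"):
    """Return the highest level an element reaches (hypothetical helper).

    checkpoints_met: level -> True if the element meets that level's own checkpoints
    dependencies:    level -> {other element: level that element must have reached}
    other_levels:    other element -> its current level
    max_level:       highest level this element can attain at all
    """
    achieved = "0"
    for level in LEVELS[1:LEVELS.index(max_level) + 1]:
        # Checkpoints are cumulative: failing a level blocks every higher level.
        if not checkpoints_met.get(level, False):
            break
        # Every dependency for this level must be satisfied by the other elements.
        required = dependencies.get(level, {})
        if any(
            LEVELS.index(other_levels.get(name, "0")) < LEVELS.index(needed)
            for name, needed in required.items()
        ):
            break
        achieved = level
    return achieved


# The example from the text: element 1 reaches level B only if
# element 5 is at level B and element 9 is at level C.
deps = {"B": {"element 5": "B", "element 9": "C"}}
met = {"A": True, "B": True}
print(determine_level(met, deps, {"element 5": "B", "element 9": "B"}))  # A
print(determine_level(met, deps, {"element 5": "B", "element 9": "C"}))  # B
```

In the first call the element meets the checkpoints of level B but stays at level A, because element 9 has not yet reached level C.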

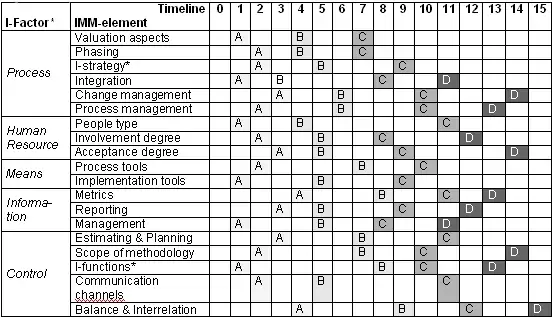

Implementation maturity matrix

After analyzing the implementation process, the dependencies and the checkpoints, all values and concepts can be inserted into an implementation maturity matrix. This matrix is constructed to show the relationships between all implementation factors, elements and levels. Besides giving a complete status overview of the implementation processes within an organization, it is also a good means for communication.

An example of an implementation maturity matrix is given below (see Figure 2). The implementation factors can be found in the first column, followed by the IMM-elements in the second one. The maturity levels belonging to the elements can be found in the cells below the timeline. This timeline does not hold any temporal meaning. It merely allows the matrix to depict empty cells between the different levels. Although these empty cells don't have particular meanings, they do illustrate the dependencies between elements and their maturity levels. Due to these dependencies and checkpoints, even if an element has almost achieved, for instance, maturity level B, it will still be assigned to level A.

*: ‘I’ is the abbreviation of ‘implementation’

The maturity level of each implementation factor can be derived from the maturities of the elements it comprises. The overall maturity level of the implementation processes can then, in its turn, be derived from the maturities of these five factors altogether.
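The text does not state the exact rule by which a factor's maturity is derived from its elements. As one possible reading, the sketch below assumes a "weakest link" rule: a factor is as mature as its least mature element, and the overall process is as mature as its least mature factor. Both this rule and the example levels are assumptions made for illustration, not something prescribed by the IMM sources cited here.

```python
LEVELS = ["0", "A", "B", "C", "D"]  # ordered from lowest to highest


def lowest(levels):
    """Return the lowest maturity level in an iterable of levels."""
    return min(levels, key=LEVELS.index)


def factor_maturity(element_levels):
    """ASSUMPTION: a factor is as mature as its least mature element."""
    return lowest(element_levels.values())


def process_maturity(matrix):
    """ASSUMPTION: the overall maturity is that of the least mature factor."""
    return lowest(factor_maturity(elements) for elements in matrix.values())


# Hypothetical excerpt of a matrix with two factors (levels are made up).
matrix = {
    "Means": {
        "Process tools": "B",
        "Implementation tools": "A",
        "Implementation functions": "B",
    },
    "Control": {
        "Estimating and planning": "C",
        "Scope of methodology": "B",
    },
}

print(factor_maturity(matrix["Means"]))    # A
print(factor_maturity(matrix["Control"]))  # B
print(process_maturity(matrix))            # A
```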

To clarify the activities and concepts of the process-data diagram elaborated so far, two tables containing the definitions and explanations are included below. Table 2 contains the definitions of the concepts of the meta-data model (right-hand side of Figure 1) and Table 3 contains the explanations of the activities of the meta-process model (left-hand side of Figure 1) and how they are connected to the concepts of the meta-data model.

Table 2: Concept definition list of meta-data model

| Concept | Definition (source) |
|---|---|
| Implementation process | The process of preparing an organization for an organizational change and the actual implementation and embedding of that change (Rooimans et al., 2003, p. 199). |
| Implementation factor | Factors that have to be taken into account when carrying out change-projects and when the goal is to achieve balance between the key areas organization and ICT. In the best situation, a change-project will strive to create balance and alignment between the various factors. The five implementation factors are Process, Human resource, Information, Means and Control (Rooimans et al., 2003, p. 199). |
| IMM-element | In each implementation process, certain areas (elements) need specific attention in order to achieve a well-defined process. These areas, also called IMM-elements, are therefore the basis for improving and structuring the implementation process. The IMM has a total of 19 IMM-elements, which, grouped together, constitute each of the above-mentioned implementation factors (Rooimans et al., 2003, p. 198; Koomen & Pol, 1998). |
| Implementation Factor Maturity | Extent to which a specific implementation factor is explicitly defined, managed, measured, controlled and effective. The maturity levels that each implementation factor can take on correspond to the maturity levels of the implementation process (see below) (Rooimans et al., 2003, pp. 155–169). |
| IMM-element Level | The five levels used to differentiate maturity levels of each IMM-element. These levels correspond to the maturity levels of the implementation process (see below) (Rooimans et al., 2003, p. 198). |
| Dependency | A certain condition that has to be met before an IMM-element can reach a specific IMM-element level. Dependencies are defined in terms of IMM-elements (other than the one in focus) and their maturity levels (Rooimans et al., 2003, pp. 155–169). |
| Checkpoint | A certain property that a specific IMM-element has to possess before it can reach a certain IMM-element level (Rooimans et al., 2003, pp. 155–169). |
| Implementation Maturity Matrix | Instrument with which the degree of maturity regarding the implementation becomes visible after all the IMM-elements and their corresponding levels are filled in (Rooimans et al., 2003, p. 198). |
| Implementation Process Maturity | Extent to which a specific implementation process is explicitly defined, managed, measured, controlled and effective. Maturity implies a potential for growth in capability and indicates both the richness of an organization's implementation process and the consistency with which it is applied in projects throughout the organization (Software Engineering Institute [SEI], 1995, p. 9). The five maturity levels that can be differentiated in the implementation process are 0 (Initial), A (Repeatable), B (Defined), C (Managed) and D (Optimizing). |

Table 3: Activities and sub-activities in the maturity assessment process

| Activity | Sub-activity | Description |
|---|---|---|
| Key areas analysis | Analyze implementation factors | In order to insert relevant values into the implementation maturity matrix, the current implementation process and its key areas need to be analyzed. This analysis starts with the implementation factors and the IMM-elements. Each implementation process embodies five implementation factors, which are Process, Human Resource, Means, Information and Control. (Rooimans et al., 2003, p. 160) |
| | Analyze IMM-elements | Each implementation factor is subdivided into two or more IMM-elements that, altogether, represent the entire implementation process. This sub-activity ends in a total of 19 IMM-elements accompanied by information filled in for each element that reflects the current implementation processes. (Rooimans et al., 2003, pp. 160–163) |
| Requirements analysis | Check dependencies | In order to determine the level of an IMM-element, there are specific dependencies to consider. Dependencies state that other IMM-elements have to have achieved certain levels before the IMM-element in focus can be classified into a specific level. Each implementation process will result in different dependencies among IMM-elements and their levels. (Rooimans et al., 2003, pp. 166–169) |
| | Check checkpoints | In order to be classified into a specific IMM-element level, there are specific properties (called checkpoints) that the IMM-element has to possess. (Rooimans et al., 2003, pp. 166–169) |
| Determine implementation maturity model-elements levels | | After all dependencies and checkpoints have been considered, the levels of the IMM-elements can be determined. (Rooimans et al., 2003, pp. 164–169) |
| Determine implementation factors maturities | | After the levels of all IMM-elements have been determined, the maturity level of the implementation factors can be determined as well. (Rooimans et al., 2003, pp. 166–167) |
| Determine implementation process maturity | | When the implementation maturity matrix contains all the factors, elements and their values, the implementation process maturity can be derived from it. (Rooimans et al., 2003, p. 167) |

Example case study

This section contains a fictitious case study, written by the author of this entry, to illustrate the application of the IMM assessment. It involves an IT organization named ManTech, which focuses on helping companies with implementation projects. Due to the growing competition between ManTech and similar firms, the CEO of ManTech has decided to establish a group of three managers to help him assess the organization's current way of implementing. To find out which level their implementation processes have reached, they have decided to use the IMM to guide their assessment.

To come up with relevant values to insert into the matrix, they needed to decompose their implementation processes into the five implementation factors and nineteen IMM-elements and valuate them. By carrying out interviews with employees and project managers and investigating documents of previous projects or those in progress, they had to determine the properties of all nineteen elements that are applicable to ManTech.

From the analysis, the following things were found:

- most, if not all, projects comprised two phases: planning and execution

- there was no standardized way of writing reports after each project

- the tools used to support project activities vary per project

- developing implementation strategies involves the project itself closely

- the management of the implementation projects remains within each project and is not applicable to other projects

- the stakeholders are only involved with the implementation during the actual usage of the software implemented

- when project teams are needed, people are simply selected based on their availability and not on their specific knowledge and skills

- estimations are supported with statistical data per project

- statistical data (metrics) are calculated for individual projects for each deliverable

- communication often only takes place within the project teams

- applied methods vary per project

- implementation factors are valuated separately

- to guide the projects, formal roles are appointed to employees

- people of the organization are very reluctant to adapt to changes within the organization

Unfortunately, due to the lack of documentation, the managers were not able to find all the needed information to valuate all IMM-elements. However, the CEO decided that they should nevertheless insert the values into the matrix, which turned out like Figure 3 below. The elements that they could not valuate are grayed out.


Just when the CEO wanted to derive the overall maturity from the matrix, one of the managers reminded him of the dependencies and checkpoints that needed to be considered. After some additional analysis, they found, among others, the following dependencies and checkpoints that might affect the maturity levels in their initial findings (see Table 4).

Table 4: Dependencies and checkpoints – ManTech.

| Element & Level | Depends on | Requires |
|---|---|---|
| Implementation strategy (A) | Valuating aspects (A), Involvement degree (A) | All risks have to be taken into account and the people involved have to at least accept the technical changes in the organization. |
| Communication channels (A) | People type (B), Involvement degree (A) | Extensive communication between project team members. |
| Estimating and planning (A) | Metrics (A), Reporting (A) | Estimations and planning are supported. |
| Estimating and planning (B) | Metrics (B), Reporting (B) | Each project needs to be supported by statistical data and estimations. |

After taking this additional information into account, the new version of the matrix looks like Figure 4.

Looking at this matrix, the CEO realized that improvements needed to be made if they were to compete with the other firms. The first step towards this goal was to make sure that the grayed-out elements could be and were analyzed. The second step was to improve the elements ‘implementation strategy’ and ‘communication channels’ so that they at least achieve level A. The dependencies and checkpoints they found would help them decide on improvement actions.

For ‘implementation strategy’ to achieve level A, ‘valuating aspects’ needs to be analyzed first. For ‘communication channels’ to achieve level A, the way in which ManTech selects people to form project teams has to be improved first. They need to identify the specific skills and knowledge that each employee has so that the organization can at least group the right people with the right combination of skills in one team.
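The reasoning above can be sketched as a check of the Table 4 dependencies against the assessed levels. The dependencies are transcribed from Table 4, but the assessed levels below are placeholders chosen only to mirror the narrative, since the matrices of Figures 3 and 4 are not reproduced here. The helper `blockers` and the dictionary names are hypothetical.

```python
LEVELS = ["0", "A", "B", "C", "D"]  # ordered from lowest to highest

# Dependencies from Table 4: (element, level) -> levels required of other elements.
DEPENDENCIES = {
    ("Implementation strategy", "A"): {"Valuating aspects": "A", "Involvement degree": "A"},
    ("Communication channels", "A"): {"People type": "B", "Involvement degree": "A"},
    ("Estimating and planning", "A"): {"Metrics": "A", "Reporting": "A"},
    ("Estimating and planning", "B"): {"Metrics": "B", "Reporting": "B"},
}

# ASSUMED levels, for illustration only. None marks an element that
# could not be valuated (grayed out in the matrix).
assessed = {
    "Valuating aspects": None,
    "Involvement degree": "A",
    "People type": "A",
}


def blockers(element, level, assessed):
    """List the dependencies that keep `element` from reaching `level`."""
    found = []
    for other, required in DEPENDENCIES[(element, level)].items():
        current = assessed.get(other)
        if current is None:
            found.append(f"{other}: not yet analyzed")
        elif LEVELS.index(current) < LEVELS.index(required):
            found.append(f"{other}: at {current}, needs {required}")
    return found


print(blockers("Implementation strategy", "A", assessed))
# ['Valuating aspects: not yet analyzed']
print(blockers("Communication channels", "A", assessed))
# ['People type: at A, needs B']
```

Under these assumed values, each blocked element points directly at the improvement action named in the text: analyze ‘valuating aspects’, and raise ‘people type’ by selecting team members on skills and knowledge.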


Entry Concept Definitions

Definitions of the concepts written in italics in the text above are given in Table 5 below.

Table 5: Concept definition and explanation.

| Concept | Definition |
|---|---|
| Implementation Process | Because software implementation always results in a change within an organization, an implementation process is defined as the process of preparing an organization for an organizational change and the actual implementation and embedding of that change. In this context, the term implementation process represents the way implementations are realized overall within an organization (Rooimans, Theye & Koop, 2003). |
| Process-data diagram | A diagram constructed with meta-modeling to express a process. A process-data diagram consists of two integrated models. The meta-process model on the left-hand side is based on a UML activity diagram, and the meta-data model on the right-hand side is an adapted UML class diagram. Combining these two models, the process-data diagram is used to reveal the relations between activities and artifacts (Saeki, 2003). |
| Test Process improvement (TPI) | Developed by Sogeti, an IT-solutions company located in the Netherlands, the test process improvement model supports the improvement of test processes. By considering different aspects, also called key areas, of the test process (e.g. use of test tools, design techniques), the model offers insight into the strengths and weaknesses of the test process and also the maturity of the test processes within an organization. A test maturity matrix is used to communicate, evaluate and derive the maturity of test processes within an organization (Koomen & Pol, 1998). |

References

- Koomen, T., & Pol, M. (1998). Improvement of the test process using TPI.

- Rooimans, R., Theye, M. de, & Koop, R. (2003). Regatta: ICT-implementaties als uitdaging voor een vier-met-stuurman. The Hague, The Netherlands: Ten Hagen en Stam Uitgevers. ISBN 90-440-0575-8.

- Saeki, M. (2003). Embedding metrics into information systems development methods: an application of method engineering technique. CAiSE 2003, 374–389.

- Software Engineering Institute [SEI], Carnegie Mellon University (1995). The capability maturity model: guidelines for improving the software process. Boston, Massachusetts, USA: Addison-Wesley. ISBN 0-201-54664-7.