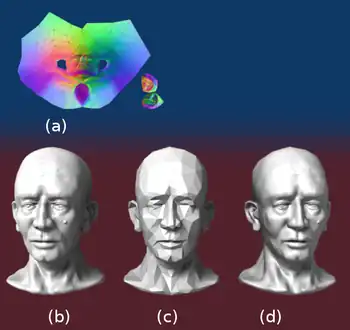

In 3D computer graphics, normal mapping, or Dot3 bump mapping, is a texture mapping technique used for faking the lighting of bumps and dents; it is an implementation of bump mapping. It adds detail without using more polygons. A common use of this technique is to greatly enhance the appearance and detail of a low-polygon model by generating a normal map from a high-polygon model or a height map.

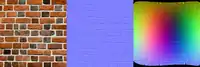

Normal maps are commonly stored as regular RGB images where the RGB components correspond to the X, Y, and Z coordinates, respectively, of the surface normal.

History

In 1978, Jim Blinn described how the normals of a surface could be perturbed to make geometrically flat faces have a detailed appearance.[1] The idea of taking geometric details from a high-polygon model was introduced in "Fitting Smooth Surfaces to Dense Polygon Meshes" by Krishnamurthy and Levoy (Proc. SIGGRAPH 1996),[2] where the approach was used to create displacement maps over NURBS. In 1998, two papers presented key ideas for transferring details with normal maps from high- to low-polygon meshes: "Appearance Preserving Simplification" by Cohen et al. (SIGGRAPH 1998)[3] and "A general method for preserving attribute values on simplified meshes" by Cignoni et al. (IEEE Visualization '98).[4] The former introduced the idea of storing surface normals directly in a texture, rather than displacements, though it required the low-detail model to be generated by a particular constrained simplification algorithm. The latter presented a simpler approach that decouples the high- and low-polygon meshes and allows any attribute of the high-detail model (color, texture coordinates, displacements, etc.) to be recreated in a way that does not depend on how the low-detail model was created. The combination of storing normals in a texture with the more general creation process is still used by most currently available tools.

Spaces

The orientation of coordinate axes differs depending on the space in which the normal map was encoded. A straightforward implementation encodes normals in object space so that the red, green, and blue components correspond directly with the X, Y, and Z coordinates. In object space, the coordinate system is constant.

However, object-space normal maps cannot be easily reused on multiple models, as the orientation of the surfaces differs. Since color texture maps can be reused freely, and normal maps tend to correspond with a particular texture map, it is desirable for artists that normal maps have the same property.

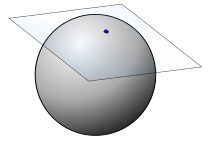

Normal map reuse is made possible by encoding maps in tangent space. The tangent space is a vector space that is tangent to the model's surface. Its coordinate system varies smoothly (based on the derivatives of position with respect to texture coordinates) across the surface.
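As an illustration of how a tangent-space basis follows from the derivatives of position with respect to texture coordinates, the sketch below solves for the tangent and bitangent of a single triangle. The function name `triangle_tangent_basis` and its argument layout are hypothetical, and the per-triangle formulation is one common approach rather than the method of any particular tool.

```python
def triangle_tangent_basis(p0, p1, p2, uv0, uv1, uv2):
    """Return (tangent, bitangent) for one triangle, both unnormalized.

    Solves  e1 = du1*T + dv1*B  and  e2 = du2*T + dv2*B  for T and B,
    where e1, e2 are position edges and (du, dv) are the matching UV edges.
    """
    # Position edges of the triangle
    e1 = [p1[i] - p0[i] for i in range(3)]
    e2 = [p2[i] - p0[i] for i in range(3)]
    # Texture-coordinate edges
    du1, dv1 = uv1[0] - uv0[0], uv1[1] - uv0[1]
    du2, dv2 = uv2[0] - uv0[0], uv2[1] - uv0[1]
    det = du1 * dv2 - du2 * dv1
    if det == 0.0:
        raise ValueError("degenerate UV mapping: texture edges are parallel")
    inv = 1.0 / det
    # Tangent follows increasing u, bitangent follows increasing v
    tangent = [(dv2 * e1[i] - dv1 * e2[i]) * inv for i in range(3)]
    bitangent = [(du1 * e2[i] - du2 * e1[i]) * inv for i in range(3)]
    return tangent, bitangent
```

In practice the per-triangle results are usually averaged at shared vertices and normalized, which is what makes the basis vary smoothly across the surface.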

Tangent space normal maps can be identified by their dominant purple color, corresponding to a vector facing directly out from the surface. See Calculation.

Calculating tangent space

Normals are used in computer graphics primarily for lighting. A normal is a vector indicating the direction a surface is facing. To determine how a surface must be lit, the software must know how it is oriented, since orientation greatly influences how light is reflected off it. Normals can be specified in a variety of coordinate systems. In computer graphics, it is useful to compute normals relative to the tangent plane of the surface: surfaces in video games and other applications undergo a variety of transforms before they are finally rendered, so a coordinate system relative to the surface's own orientation is required. Skeletal animation of a finely detailed character is a concrete example. If a character's arm is bent, the normal map must reflect the new orientation without computationally expensive updates to the texture data during the transform.

In order to find the perturbation in the normal, the tangent space must be correctly calculated.[5] Most often the normal is perturbed in a fragment shader after applying the model and view matrices. Typically the geometry provides a normal and a tangent. The tangent lies in the tangent plane and can be transformed simply with the linear part of the matrix (the upper 3x3). However, the normal needs to be transformed by the inverse transpose. Most applications will want the bitangent to match the transformed geometry (and associated UVs). So instead of forcing the bitangent to be perpendicular to the tangent, it is generally preferable to transform the bitangent just like the tangent. Let t be the tangent, b the bitangent, n the normal, M3x3 the linear part of the model matrix, and V3x3 the linear part of the view matrix.
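With these definitions, the transforms described above can be written out as follows. This is a sketch that assumes column vectors multiplied on the right of each matrix (the text does not fix a convention), and the primed names t', b', n' are introduced here only to denote the transformed vectors.

$$
\begin{aligned}
t' &= V_{3\times3}\, M_{3\times3}\, t \\
b' &= V_{3\times3}\, M_{3\times3}\, b \\
n' &= \left(V_{3\times3}\, M_{3\times3}\right)^{-\mathsf{T}} n = V_{3\times3}^{-\mathsf{T}}\, M_{3\times3}^{-\mathsf{T}}\, n
\end{aligned}
$$

The inverse transpose is what keeps the normal perpendicular to the tangent plane: $n'^{\mathsf{T}} t' = n^{\mathsf{T}} (V_{3\times3} M_{3\times3})^{-1} (V_{3\times3} M_{3\times3})\, t = n^{\mathsf{T}} t = 0$, and likewise for $b'$.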

Calculation

To calculate the Lambertian (diffuse) lighting of a surface, the unit vector from the shading point to the light source is dotted with the unit vector normal to that surface, and the result is the intensity of the light on that surface. Imagine a polygonal model of a sphere: the polygons can only approximate the shape of the surface. By using a 3-channel bitmap textured across the model, more detailed normal vector information can be encoded. Each channel in the bitmap corresponds to a spatial dimension (X, Y and Z). These spatial dimensions are relative to a constant coordinate system for object-space normal maps, or to a smoothly varying coordinate system (based on the derivatives of position with respect to texture coordinates) in the case of tangent-space normal maps. This adds much more detail to the surface of a model, especially in conjunction with advanced lighting techniques.
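The diffuse term described above can be sketched in a few lines. The helper names `normalize` and `lambert_intensity` are hypothetical, and clamping negative results to zero (so that surfaces facing away from the light receive no light) is a common convention assumed here rather than something stated in the text.

```python
import math

def normalize(v):
    """Scale a 3-vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def lambert_intensity(normal, to_light):
    """Diffuse intensity: dot product of the unit normal and unit light vector.

    `to_light` points from the shading point towards the light source.
    Negative dot products are clamped to 0 (assumed convention).
    """
    n = normalize(normal)
    l = normalize(to_light)
    return max(0.0, sum(a * b for a, b in zip(n, l)))
```

With a normal map, `normal` would be the per-pixel vector read from the texture instead of the interpolated geometric normal, which is how the extra surface detail enters the lighting result.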

Unit normal vectors corresponding to the (u, v) texture coordinates are mapped onto normal maps. Only vectors pointing towards the viewer (z: 0 to -1 for a left-handed orientation) are present, since vectors on geometry pointing away from the viewer are never shown. The mapping is as follows:

- X: -1 to +1 maps to Red: 0 to 255

- Y: -1 to +1 maps to Green: 0 to 255

- Z: 0 to -1 maps to Blue: 128 to 255

- A normal pointing directly towards the viewer (0,0,-1) is mapped to (128,128,255). Hence the parts of an object directly facing the viewer are light blue, the most common color in a normal map.

- A normal pointing to the top-right corner of the texture (1,1,0) is mapped to (255,255,128). Hence the top-right corner of an object is usually light yellow, the brightest color in the map.

- A normal pointing to the right of the texture (1,0,0) is mapped to (255,128,128). Hence the right edge of an object is usually light red.

- A normal pointing to the top of the texture (0,1,0) is mapped to (128,255,128). Hence the top edge of an object is usually light green.

- A normal pointing to the left of the texture (-1,0,0) is mapped to (0,128,128). Hence the left edge of an object is usually dark cyan.

- A normal pointing to the bottom of the texture (0,-1,0) is mapped to (128,0,128). Hence the bottom edge of an object is usually dark magenta.

- A normal pointing to the bottom-left corner of the texture (-1,-1,0) is mapped to (0,0,128). Hence the bottom-left corner of an object is usually dark blue, the darkest color in the map.
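The mapping listed above can be sketched as a pair of small functions. The names `encode_normal` and `decode_normal` are hypothetical, and both the round-half-up quantization and the renormalization on decode are assumptions made for this illustration, not details given in the text.

```python
import math

def encode_normal(x, y, z):
    """Map a normal with z in [-1, 0] to 8-bit (R, G, B) channel values."""
    def to_byte(c):
        # Remap [-1, 1] to [0, 255], rounding half up (assumed quantization)
        return int((c / 2.0 + 0.5) * 255.0 + 0.5)
    # z is negated so normals facing the viewer (z = -1) get blue = 255
    return to_byte(x), to_byte(y), to_byte(-z)

def decode_normal(r, g, b):
    """Approximately invert encode_normal, returning a unit vector."""
    x = r / 255.0 * 2.0 - 1.0
    y = g / 255.0 * 2.0 - 1.0
    z = -(b / 255.0 * 2.0 - 1.0)
    # 8-bit storage is lossy (128 does not decode to exactly 0),
    # so renormalize the result
    length = math.sqrt(x * x + y * y + z * z)
    return x / length, y / length, z / length
```

For example, `encode_normal(0, 0, -1)` gives `(128, 128, 255)` and `encode_normal(-1, -1, 0)` gives `(0, 0, 128)`, matching the first and last entries in the list.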

Since a normal is used in the dot product of the diffuse lighting computation, {0, 0, –1} is remapped to {128, 128, 255}, giving the sky-blue color typical of normal maps (the blue (z) channel is the depth coordinate, while the red and green channels are the flat x and y coordinates on screen). Without a sign flip, {0.3, 0.4, –0.866} would be remapped to ({0.3, 0.4, –0.866}/2+{0.5, 0.5, 0.5})*255={0.15+0.5, 0.2+0.5, -0.433+0.5}*255={0.65, 0.7, 0.067}*255={166, 179, 17} (this is a unit vector, since 0.3² + 0.4² + 0.866² ≈ 1). The sign of the z-coordinate (blue channel) must therefore be flipped to match the normal map's normal vector with that of the eye (the viewpoint or camera) or the light vector; with the flip, the blue value becomes (0.433+0.5)*255 ≈ 238, which falls in the 128 to 255 range given for the blue channel. Since negative z values mean that the vertex is in front of the camera (rather than behind it), this convention guarantees that the surface shines with maximum strength precisely when the light vector and normal vector are coincident.[6]

Normal mapping in video games

Interactive normal map rendering was originally only possible on PixelFlow, a parallel rendering machine built at the University of North Carolina at Chapel Hill. It later became possible to perform normal mapping on high-end SGI workstations using multi-pass rendering and framebuffer operations[7] or on low-end PC hardware with some tricks using paletted textures. However, with the advent of shaders in personal computers and game consoles, normal mapping became widely used in commercial video games starting in late 2003. Normal mapping's popularity for real-time rendering is due to its favorable ratio of quality to processing requirements compared with other methods of producing similar effects. Much of this efficiency is made possible by distance-indexed detail scaling, a technique which selectively decreases the detail of the normal map of a given texture (cf. mipmapping), meaning that more distant surfaces require less complex lighting simulation. Many authoring pipelines use high-resolution models baked into low- or medium-resolution in-game models augmented with normal maps.

Basic normal mapping can be implemented on any hardware that supports palettized textures. The first game console to have specialized normal mapping hardware was the Sega Dreamcast. However, Microsoft's Xbox was the first console to widely use the effect in retail games. Of the sixth-generation consoles, only the PlayStation 2's GPU lacks built-in normal mapping support, though it can be simulated using the PlayStation 2 hardware's vector units. Games for the Xbox 360 and the PlayStation 3 rely heavily on normal mapping, and these consoles were the first generation to make use of parallax mapping. The Nintendo 3DS has been shown to support normal mapping, as demonstrated by Resident Evil: Revelations and Metal Gear Solid 3: Snake Eater.

References

- [1] Blinn, Simulation of Wrinkled Surfaces, SIGGRAPH 1978

- [2] Krishnamurthy and Levoy, Fitting Smooth Surfaces to Dense Polygon Meshes, SIGGRAPH 1996

- [3] Cohen et al., Appearance-Preserving Simplification, SIGGRAPH 1998 (PDF)

- [4] Cignoni et al., A general method for preserving attribute values on simplified meshes, IEEE Visualization 1998 (PDF)

- [5] Mikkelsen, Simulation of Wrinkled Surfaces Revisited, 2008 (PDF)

- [6] "LearnOpenGL - Normal Mapping", learnopengl.com. Retrieved 2021-10-19.

- [7] Heidrich and Seidel, Realistic, Hardware-accelerated Shading and Lighting, SIGGRAPH 1999 (PDF)

External links

- Normal Map Tutorial Per-pixel logic behind Dot3 Normal Mapping

- NormalMap-Online Free Generator inside Browser

- Normal Mapping on sunandblackcat.com

- Blender Normal Mapping

- Normal Mapping with paletted textures using old OpenGL extensions.

- Normal Map Photography Creating normal maps manually by layering digital photographs

- Normal Mapping Explained

- Simple Normal Mapper Open Source normal map generator