A 100-year flood is a flood event that has on average a 1 in 100 chance (1% probability) of being equaled or exceeded in any given year.[1]

The 100-year flood is also referred to as the 1% flood.[2] For coastal or lake flooding, the 100-year flood is generally expressed as a flood elevation or depth, and may include wave effects. For river systems, the 100-year flood is generally expressed as a flow rate. Based on the expected 100-year flood flow rate, the flood water level can be mapped as an area of inundation. The resulting floodplain map is referred to as the 100-year floodplain. Estimates of the 100-year flood flow rate and other streamflow statistics for any stream in the United States are available.[3] In the UK, the Environment Agency publishes a comprehensive map of all areas at risk of a 1-in-100-year flood.[4] Areas near the coast of an ocean or large lake can also be flooded by combinations of tide, storm surge, and waves.[5] Maps of the riverine or coastal 100-year floodplain may figure importantly in building permits, environmental regulations, and flood insurance. These analyses generally represent 20th-century climate.

Probability

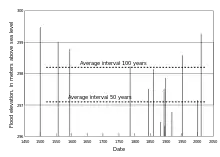

A common misunderstanding is that a 100-year flood is likely to occur only once in a 100-year period. In fact, there is approximately a 63.4% chance of one or more 100-year floods occurring in any 100-year period. On the Danube River at Passau, Germany, the actual intervals between 100-year floods during 1501 to 2013 ranged from 37 to 192 years.[6] The probability Pe that one or more floods occurring during any period will exceed a given flood threshold can be expressed, using the binomial distribution, as

$$P_e = 1 - \left(1 - \frac{1}{T}\right)^{n}$$

where T is the threshold return period (e.g. 100-yr, 50-yr, 25-yr, and so forth), and n is the number of years in the period. The probability of exceedance Pe is also described as the natural, inherent, or hydrologic risk of failure.[7][8] However, the expected value of the number of 100-year floods occurring in any 100-year period is 1.
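As a numerical check, the following minimal Python sketch evaluates the exceedance relation Pe = 1 − (1 − 1/T)^n and the expected number of exceedances n/T. The function names are illustrative only, and the calculation assumes independent years with a constant annual probability of 1/T.

```python
def exceedance_probability(return_period_years: float, horizon_years: int) -> float:
    """Probability of at least one exceedance of the T-year flood in n years.

    Implements Pe = 1 - (1 - 1/T)**n, assuming independent years and a
    constant annual exceedance probability of 1/T.
    """
    if return_period_years < 1:
        raise ValueError("return period must be at least 1 year")
    if horizon_years < 0:
        raise ValueError("horizon must be non-negative")
    annual_probability = 1.0 / return_period_years
    return 1.0 - (1.0 - annual_probability) ** horizon_years


def expected_exceedances(return_period_years: float, horizon_years: int) -> float:
    """Expected number of exceedances in n years (mean of the binomial count)."""
    return horizon_years / return_period_years


if __name__ == "__main__":
    for T in (25, 50, 100, 500):
        pe = exceedance_probability(T, 100)
        mean_count = expected_exceedances(T, 100)
        print(f"T = {T:>3} yr: Pe over 100 yr = {pe:.3f}, expected count = {mean_count:.2f}")
```

For T = 100 and n = 100 the probability is about 0.634, while the expected count is exactly 1. The two figures differ because some 100-year periods contain several such floods and others contain none.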

Ten-year floods have a 10% chance of occurring in any given year (Pe = 0.10); 500-year floods have a 0.2% chance of occurring in any given year (Pe = 0.002); and so on. In general, the percent chance of an X-year flood occurring in a single year is 100/X. A similar analysis is commonly applied to coastal flooding or rainfall data. The recurrence interval of a storm is rarely identical to that of an associated riverine flood, because of rainfall timing and location variations among different drainage basins.

The field of extreme value theory was created to model rare events such as 100-year floods for the purposes of civil engineering. This theory is most commonly applied to the maximum or minimum observed stream flows of a given river. In desert areas where there are only ephemeral washes, this method is applied to the maximum observed rainfall over a given period of time (24 hours, 6 hours, or 3 hours). The extreme value analysis considers only the most extreme event observed in a given year. So, between a large spring runoff and a heavy summer rainstorm, whichever produced more runoff would be treated as the extreme event, while the smaller event would be ignored in the analysis (even though both may have been capable of causing severe flooding in their own right).
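The reduction of a record to one value per year can be sketched as follows. The example keeps only the largest flow in each year and then assigns each annual maximum an empirical return period using the Weibull plotting position, T = (n + 1)/rank. The plotting position is a common textbook convention introduced here for illustration, not a method named in the sources cited above, and the sample flows are hypothetical.

```python
def annual_maxima(observations):
    """Return {year: largest flow} from an iterable of (year, flow) pairs.

    Smaller events in the same year are discarded, as in an
    annual-maximum extreme value analysis.
    """
    maxima = {}
    for year, flow in observations:
        if year not in maxima or flow > maxima[year]:
            maxima[year] = flow
    return maxima


def empirical_return_periods(maxima):
    """Rank annual maxima and apply the Weibull plotting position.

    Returns a list of (year, flow, return_period) sorted from the
    largest flow to the smallest, with return_period = (n + 1) / rank.
    """
    ranked = sorted(maxima.items(), key=lambda item: item[1], reverse=True)
    n = len(ranked)
    return [
        (year, flow, (n + 1) / rank)
        for rank, (year, flow) in enumerate(ranked, start=1)
    ]


if __name__ == "__main__":
    # Hypothetical peak flows (m^3/s): a spring runoff and a summer storm per year.
    record = [
        (2001, 850.0), (2001, 620.0),
        (2002, 400.0), (2002, 910.0),
        (2003, 300.0), (2003, 280.0),
    ]
    for year, flow, period in empirical_return_periods(annual_maxima(record)):
        print(f"{year}: {flow:.0f} m^3/s, empirical return period about {period:.1f} yr")
```

With a short record the largest empirical return period is only n + 1 years, which is one reason a fitted probability distribution is needed to estimate an event as rare as the 100-year flood.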

Statistical assumptions

Determining the 100-year flood requires several statistical assumptions. First, the extreme events observed in each year must be independent from year to year. In other words, the maximum river flow rate in 1984 must not be significantly correlated with the maximum in 1985, which in turn must not be correlated with the maximum in 1986, and so forth. The second assumption is that the observed extreme events all come from the same probability distribution. The third assumption is that the probability distribution relates to the largest event (rainfall or river flow rate measurement) that occurs in any one year. The fourth assumption is that the probability distribution is stationary, meaning that its mean (average), standard deviation, and maximum and minimum values are not increasing or decreasing over time, a property referred to as stationarity.[8][9]

The first assumption is often but not always valid and should be tested on a case-by-case basis. The second assumption is often valid if the extreme events are observed under similar climate conditions. For example, if the extreme events on record all come from late-summer thunderstorms (as is the case in the southwestern U.S.) or from snowpack melting (as is the case in the north-central U.S.), then this assumption should be valid. If, however, some extreme events come from thunderstorms, others from snowpack melting, and others from hurricanes, then this assumption is most likely not valid. The third assumption is a problem only when trying to forecast a small annual-maximum flow event (for example, an event smaller than a 2-year flood). Because this is not typically a goal in extreme value analysis or in civil engineering design, the situation rarely arises.
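One simple way to screen the independence assumption is to compute the lag-1 autocorrelation of the annual maximum series. The sketch below is an illustration under stated assumptions, not a procedure taken from the cited references: the function names are hypothetical, and the ±1.96/√n band is only an approximate large-sample rule of thumb for an uncorrelated series.

```python
import math
from statistics import fmean


def lag1_autocorrelation(series):
    """Sample lag-1 autocorrelation of a sequence of annual maxima."""
    n = len(series)
    if n < 3:
        raise ValueError("need at least three values")
    mean = fmean(series)
    total = sum((x - mean) ** 2 for x in series)
    if total == 0:
        raise ValueError("series has no variation")
    # Products of deviations for each pair of consecutive years.
    lagged = sum(
        (series[i] - mean) * (series[i + 1] - mean) for i in range(n - 1)
    )
    return lagged / total


def looks_independent(series, z=1.96):
    """Rough screen: is the lag-1 autocorrelation inside +/- z / sqrt(n)?"""
    bound = z / math.sqrt(len(series))
    return abs(lag1_autocorrelation(series)) <= bound


if __name__ == "__main__":
    # Hypothetical annual peak flows (m^3/s), one value per consecutive year.
    peaks = [410.0, 530.0, 290.0, 780.0, 350.0, 620.0, 480.0, 300.0, 910.0, 440.0]
    r1 = lag1_autocorrelation(peaks)
    print(f"lag-1 autocorrelation = {r1:.2f}, passes screen: {looks_independent(peaks)}")
```

A value outside the band suggests year-to-year persistence that deserves a closer look. A value inside it does not prove independence, especially for short records.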

The final assumption about stationarity is difficult to test from data for a single site because of the large uncertainties in even the longest flood records[6] (see next section). More broadly, substantial evidence of climate change strongly suggests that the probability distribution is also changing[10] and that managing flood risks in the future will become even more difficult.[11] The simplest implication of this is that most of the historical data represent 20th-century climate and might not be valid for extreme event analysis in the 21st century.
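As an illustration of what a single-site check for a trend can look like, the sketch below computes the Mann-Kendall statistic for an annual maximum series. This rank-based test is a widely used generic trend test that is introduced here as an assumption, not a method prescribed by the cited references. The version shown ignores tied values and serial correlation, and, consistent with the caution above, it has little power on short or noisy flood records.

```python
import math


def mann_kendall(series):
    """Return (S, Z) for the Mann-Kendall trend test, without tie correction.

    S counts later-minus-earlier pairs that increase minus those that
    decrease. Z is the continuity-corrected normal approximation.
    """
    n = len(series)
    if n < 3:
        raise ValueError("need at least three values")
    s = 0
    for i in range(n - 1):
        for j in range(i + 1, n):
            diff = series[j] - series[i]
            s += (diff > 0) - (diff < 0)
    variance = n * (n - 1) * (2 * n + 5) / 18.0
    if s > 0:
        z = (s - 1) / math.sqrt(variance)
    elif s < 0:
        z = (s + 1) / math.sqrt(variance)
    else:
        z = 0.0
    return s, z


if __name__ == "__main__":
    # Hypothetical annual peak flows (m^3/s) in chronological order.
    peaks = [410.0, 530.0, 290.0, 780.0, 350.0, 620.0, 480.0, 300.0, 910.0, 440.0]
    s, z = mann_kendall(peaks)
    # |Z| above about 1.96 would indicate a trend at roughly the 5% level.
    print(f"S = {s}, Z = {z:.2f}")
```

Failing to detect a trend at one site is weak evidence of stationarity, which is why the broader climate evidence carries more weight than any single record.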

Probability uncertainty

When these assumptions are violated, there is an unknown amount of uncertainty introduced into the reported value of what the 100-year flood means in terms of rainfall intensity, or flood depth. When all of the inputs are known, the uncertainty can be measured in the form of a confidence interval. For example, one might say there is a 95% chance that the 100-year flood is greater than X, but less than Y.[2]
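The idea of a confidence interval around a 100-year estimate can be sketched with a bootstrap. The example below fits a Gumbel distribution by the method of moments and resamples the annual maxima to obtain a percentile interval. Both choices are simplifying assumptions made for illustration: U.S. federal practice under Bulletin 17C [9] uses a different procedure, and the function names and data are hypothetical.

```python
import math
import random
from statistics import fmean, stdev

EULER_GAMMA = 0.5772156649  # Euler-Mascheroni constant


def gumbel_quantile(annual_maxima, return_period_years):
    """Method-of-moments Gumbel estimate of the T-year event."""
    if return_period_years <= 1:
        raise ValueError("return period must exceed 1 year")
    scale = stdev(annual_maxima) * math.sqrt(6.0) / math.pi
    location = fmean(annual_maxima) - EULER_GAMMA * scale
    non_exceedance = 1.0 - 1.0 / return_period_years
    return location - scale * math.log(-math.log(non_exceedance))


def bootstrap_interval(annual_maxima, return_period_years,
                       n_resamples=2000, level=0.95, seed=0):
    """Percentile bootstrap interval for the T-year estimate."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    n = len(annual_maxima)
    estimates = sorted(
        gumbel_quantile(rng.choices(annual_maxima, k=n), return_period_years)
        for _ in range(n_resamples)
    )
    tail = (1.0 - level) / 2.0
    lower = estimates[int(tail * n_resamples)]
    upper = estimates[int((1.0 - tail) * n_resamples) - 1]
    return lower, upper


if __name__ == "__main__":
    # Hypothetical 30-year record of annual peak flows (m^3/s).
    peaks = [412, 530, 295, 780, 350, 620, 481, 300, 910, 440,
             505, 388, 664, 720, 315, 450, 590, 835, 370, 498,
             610, 342, 560, 475, 690, 405, 528, 760, 330, 585]
    peaks = [float(p) for p in peaks]
    estimate = gumbel_quantile(peaks, 100)
    low, high = bootstrap_interval(peaks, 100)
    print(f"100-year estimate: {estimate:.0f} m^3/s (95% interval {low:.0f} to {high:.0f})")
```

The interval reflects only sampling variability under the assumed distribution. It does not capture errors from violated assumptions such as non-stationarity or mixed flood populations.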

Direct statistical analysis[9][12] to estimate the 100-year riverine flood is possible only at the relatively few locations where an annual series of maximum instantaneous flood discharges has been recorded. In the United States as of 2014, taxpayers have supported such records for at least 60 years at fewer than 2,600 locations, for at least 90 years at fewer than 500, and for at least 120 years at only 11.[13] For comparison, the total area of the nation is about 3,800,000 square miles (9,800,000 km²), so there are perhaps 3,000 stream reaches that drain watersheds of 1,000 square miles (2,600 km²) and 300,000 reaches that drain 10 square miles (26 km²). In urban areas, 100-year flood estimates are needed for watersheds as small as 1 square mile (2.6 km²). For reaches without sufficient data for direct analysis, 100-year flood estimates are derived from indirect statistical analysis of flood records at other locations in a hydrologically similar region or from other hydrologic models. Similarly for coastal floods, tide gauge data exist for only about 1,450 sites worldwide, of which only about 950 added information to the global data center between January 2010 and March 2016.[14]

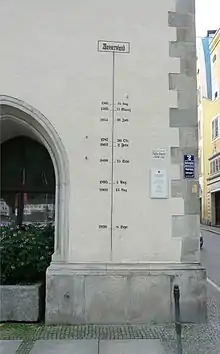

Much longer records of flood elevations exist at a few locations around the world, such as the Danube River at Passau, Germany, but they must be evaluated carefully for accuracy and completeness before any statistical interpretation.

For an individual stream reach, the uncertainties in any analysis can be large, so 100-year flood estimates have large individual uncertainties for most stream reaches.[6]: 24 For the largest recorded flood at any specific location, or any potentially larger event, the recurrence interval always is poorly known.[6]: 20, 24 Spatial variability adds more uncertainty, because a flood peak observed at different locations on the same stream during the same event commonly represents a different recurrence interval at each location.[6]: 20 If an extreme storm drops enough rain on one branch of a river to cause a 100-year flood, but no rain falls over another branch, the flood wave downstream from their junction might have a recurrence interval of only 10 years. Conversely, a storm that produces a 25-year flood simultaneously in each branch might form a 100-year flood downstream. During a time of flooding, news accounts necessarily simplify the story by reporting the greatest damage and largest recurrence interval estimated at any location. The public can easily and incorrectly conclude that the recurrence interval applies to all stream reaches in the flood area.[6]: 7, 24

Observed intervals between floods

Peak elevations of 14 floods as early as 1501 on the Danube River at Passau, Germany, reveal great variability in the actual intervals between floods.[6]: 16–19 Flood events greater than the 50-year flood occurred at intervals of 4 to 192 years since 1501, and the 50-year flood of 2002 was followed only 11 years later by a 500-year flood. Only half of the intervals between 50- and 100-year floods were within 50 percent of the nominal average interval. Similarly, the intervals between 5-year floods during 1955 to 2007 ranged from 5 months to 16 years, and only half were within 2.5 to 7.5 years.
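Wide scatter in the intervals is what the statistical model itself predicts. If each year independently has probability 1/T of a T-year flood, the waiting time in whole years between such floods follows a geometric distribution. The sketch below evaluates the chance that an interval falls within a given range. The function name is illustrative, and the calculation assumes independence and stationarity.

```python
def interval_probability(return_period_years, shortest_years, longest_years):
    """P(shortest <= K <= longest) for the geometric waiting time K.

    K is the number of years until the next exceedance when each year
    independently has exceedance probability p = 1/T, so that
    P(K >= k) = (1 - p)**(k - 1).
    """
    if return_period_years < 1:
        raise ValueError("return period must be at least 1 year")
    if not 1 <= shortest_years <= longest_years:
        raise ValueError("need 1 <= shortest <= longest")
    q = 1.0 - 1.0 / return_period_years
    return q ** (shortest_years - 1) - q ** longest_years


if __name__ == "__main__":
    for T in (5, 50, 100):
        low, high = round(0.5 * T), round(1.5 * T)
        share = interval_probability(T, low, high)
        print(f"T = {T:>3} yr: P({low} <= interval <= {high} yr) = {share:.2f}")
```

For the 100-year flood, roughly 39% of intervals would be expected to fall between 50 and 150 years under these assumptions, so intervals far from the nominal average are the norm, not the exception.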

Regulatory use

In the United States, the 100-year flood provides the risk basis for flood insurance rates under the National Flood Insurance Program (NFIP). A regulatory flood or base flood is routinely established for river reaches through a science-based rule-making process targeted to a 100-year flood at the historical average recurrence interval. In addition to historical flood data, the process accounts for previously established regulatory values, the effects of flood-control reservoirs, and changes in land use in the watershed. Coastal flood hazards have been mapped by a similar approach that includes the relevant physical processes. Most areas where serious floods can occur in the United States have been mapped consistently in this manner. On average nationwide, those 100-year flood estimates are sufficient for the purposes of the NFIP and offer reasonable estimates of future flood risk, if the future is like the past.[6]: 24 Approximately 3% of the U.S. population lives in areas subject to the 1% annual chance coastal flood hazard.[15]

In theory, removing homes and businesses from areas that flood repeatedly can protect people and reduce insurance losses, but in practice it is difficult for people to retreat from established neighborhoods.[16]

References

- Viessman, Warren (1977). Introduction to Hydrology. Harper & Row, Publishers, Inc. p. 160. ISBN 0-7002-2497-1.

- Holmes, R.R., Jr., and Dinicola, K. (2010). 100-Year flood–it's all about chance. U.S. Geological Survey General Information Product 106.

- Ries, K.G., and others (2008). StreamStats: A water resources web application. U.S. Geological Survey, Fact Sheet 2008-3067. Application home page accessed 2015-07-12.

- "Flood Map for Planning (Rivers and Sea)". Environment Agency. 2016. Archived from the original on 2016-09-16. Retrieved 25 August 2016.

- "Coastal Flooding". FloodSmart. National Flood Insurance Program. Archived from the original on 2016-03-08. Retrieved 7 March 2016.

- Eychaner, J.H. (2015). Lessons from a 500-year record of flood elevations. Association of State Floodplain Managers, Technical Report 7. Accessed 2021-11-20.

- Mays, L.W. (2005). Water Resources Engineering, chapter 10, Probability, risk, and uncertainty analysis for hydrologic and hydraulic design. Hoboken: J. Wiley & Sons.

- Maidment, D.R., ed. (1993). Handbook of Hydrology, chapter 18, Frequency analysis of extreme events. New York: McGraw-Hill.

- England, John; and seven others (29 March 2018). "Guidelines for determining flood flow frequency — Bulletin 17C". Techniques and Methods. U.S. Geological Survey. doi:10.3133/tm4B5. S2CID 134656108. Retrieved 2 October 2018.

- Milly, P. C. D.; Betancourt, J.; Falkenmark, M.; Hirsch, R. M.; Kundzewicz, Z. W.; Lettenmaier, D. P.; Stouffer, R. J. (2008-02-01). "Stationarity is Dead". Science. 319 (5863): 573–574. doi:10.1126/science.1151915. PMID 18239110. S2CID 206509974.

- Intergovernmental Panel on Climate Change (2012). Managing the risks of extreme events and disasters to advance climate change adaptation, Summary for policymakers. Archived 2015-07-19 at the Wayback Machine. Cambridge and New York: Cambridge University Press, 19 p.

- "Bulletin 17C". Advisory Committee on Water Information. Retrieved 2 October 2018.

- National Water Information System database. U.S. Geological Survey. Accessed 2014-01-30.

- "Obtaining Tide Gauge Data". Permanent Service for Mean Sea Level. PSMSL. Retrieved 7 March 2016.

- Crowell, Mark; others (2010). "An estimate of the U.S. population living in 100-year coastal flood hazard areas" (PDF). Journal of Coastal Research. 26 (2): 201–211. doi:10.2112/JCOASTRES-D-09-00076.1. S2CID 9381124. Archived from the original (PDF) on 17 October 2016. Retrieved 6 March 2016.

- Schwartz, Jen (1 August 2018). "Surrendering to rising seas". Scientific American. 319 (2): 44–55. doi:10.1038/scientificamerican0818-44. PMID 30020899. S2CID 240396828. Retrieved 2 October 2018.

External links

- "What is a 100 year flood?". Boulder Area Sustainability Information Network (BASIN). URL accessed 2006-06-16.

- "Flood Extreme Analysis". GeoTide Extreme Analysis Software. URL accessed 2023-11-28.