What started as purely linguistic research ... has led, through involvement in political causes and an identification with an older philosophic tradition, to no less than an attempt to formulate an overall theory of man. The roots of this are manifest in the linguistic theory ... The discovery of cognitive structures common to the human race but only to humans (species specific), leads quite easily to thinking of unalienable human attributes.

—Edward Marcotte on the significance of Chomsky's linguistic theory[1]

The basis of Noam Chomsky's linguistic theory lies in biolinguistics, the linguistic school that holds that the principles underpinning the structure of language are biologically preset in the human mind and hence genetically inherited.[2] He argues that all humans share the same underlying linguistic structure, irrespective of sociocultural differences.[3] In adopting this position Chomsky rejects the radical behaviorist psychology of B. F. Skinner, who viewed speech, thought, and all behavior as a completely learned product of the interactions between organisms and their environments. Accordingly, Chomsky argues that language is a unique evolutionary development of the human species and distinguished from modes of communication used by any other animal species.[4][5] Chomsky's nativist, internalist view of language is consistent with the philosophical school of "rationalism" and contrasts with the anti-nativist, externalist view of language consistent with the philosophical school of "empiricism",[6] which contends that all knowledge, including language, comes from external stimuli.[1]

Universal grammar

Since the 1960s, Chomsky has maintained that syntactic knowledge is at least partially inborn, implying that children need only learn certain language-specific features of their native languages. He bases his argument on observations about human language acquisition and describes a "poverty of the stimulus": an enormous gap between the linguistic stimuli to which children are exposed and the rich linguistic competence they attain. For example, although children are exposed to only a very small and finite subset of the allowable syntactic variants within their first language, they somehow acquire the highly organized and systematic ability to understand and produce an infinite number of sentences, including ones that have never before been uttered, in that language.[7] To explain this, Chomsky reasoned that the primary linguistic data must be supplemented by an innate linguistic capacity. Furthermore, while a human baby and a kitten are both capable of inductive reasoning, if they are exposed to exactly the same linguistic data, the human will always acquire the ability to understand and produce language, while the kitten will never acquire either ability. Chomsky referred to this difference in capacity as the language acquisition device, and suggested that linguists needed to determine both what that device is and what constraints it imposes on the range of possible human languages. The universal features that result from these constraints would constitute "universal grammar".[8][9][10]

Multiple scholars have challenged universal grammar on the grounds of the evolutionary infeasibility of its genetic basis for language,[11] the lack of universal characteristics across languages,[12] and the unproven link between innate/universal structures and the structures of specific languages.[13] Michael Tomasello has challenged Chomsky's theory of innate syntactic knowledge as grounded in theory rather than behavioral observation.[14]

Although it was influential from the 1960s through the 1990s, Chomsky's nativist theory was ultimately rejected by the mainstream child language acquisition research community owing to its inconsistency with research evidence.[15][16] Linguists including Geoffrey Sampson, Geoffrey K. Pullum, and Barbara Scholz have also argued that the linguistic evidence Chomsky offered for it was false.[17]

Transformational-generative grammar

Transformational-generative grammar is a broad theory used to model, encode, and deduce a native speaker's linguistic capabilities.[18] These models, or "formal grammars", show the abstract structures of a specific language as they may relate to structures in other languages.[19] Chomsky developed transformational grammar in the mid-1950s, whereupon it became the dominant syntactic theory in linguistics for two decades.[18] "Transformations" refers to syntactic relationships within language, e.g., being able to infer that the subject of two sentences is the same person.[20] Chomsky's theory posits that language consists of both deep structures and surface structures: outward-facing surface structures relate phonetic rules to sound, while inward-facing deep structures relate words to conceptual meaning. Transformational-generative grammar uses mathematical notation to express the rules that govern the connection between meaning and sound (deep and surface structures, respectively). By this theory, linguistic principles can mathematically generate potential sentence structures in a language.[1]
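The idea that a small set of formal rules can generate sentence structures can be illustrated with a toy phrase-structure grammar. The following Python sketch is only an illustration: the rule set, the vocabulary, and the names `GRAMMAR` and `expand` are hypothetical and are not taken from Chomsky's work, and the sketch covers rewrite rules only, not transformations.

```python
import itertools

# Hypothetical toy grammar (an assumption for illustration only).
# Each nonterminal maps to a list of alternative right-hand sides.
GRAMMAR = {
    "S": [["NP", "VP"]],
    "NP": [["Det", "N"]],
    "VP": [["V", "NP"], ["V"]],
    "Det": [["the"]],
    "N": [["child"], ["kitten"]],
    "V": [["sees"], ["sleeps"]],
}


def expand(symbol):
    """Yield every word sequence that can be derived from `symbol`."""
    if symbol not in GRAMMAR:
        # Anything without a rewrite rule is treated as a terminal word.
        yield [symbol]
        return
    for production in GRAMMAR[symbol]:
        # Derive each symbol on the right-hand side, then combine
        # one alternative per symbol in every possible way.
        parts = [list(expand(s)) for s in production]
        for combo in itertools.product(*parts):
            yield [word for part in combo for word in part]


sentences = [" ".join(words) for words in expand("S")]
for sentence in sentences:
    print(sentence)
```

Because this toy grammar contains no recursive rule, it generates only a finite set of strings. Adding a rule in which a symbol can reintroduce itself, such as a clause embedded inside a verb phrase, would make the set unbounded, and the exhaustive enumeration above would then need a depth limit.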

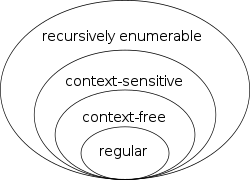

Chomsky is commonly credited with inventing transformational-generative grammar, but his original contribution was considered modest when he first published his theory. In his 1955 dissertation and his 1957 textbook Syntactic Structures, he presented recent developments in the analysis formulated by Zellig Harris, who was Chomsky's PhD supervisor, and by Charles F. Hockett.[lower-alpha 1] Their method is derived from the work of the Danish structural linguist Louis Hjelmslev, who introduced algorithmic grammar to general linguistics.[lower-alpha 2] Based on this rule-based notation of grammars, Chomsky grouped logically possible phrase-structure grammar types into a series of four nested subsets and increasingly complex types, together known as the Chomsky hierarchy. This classification remains relevant to formal language theory[21] and theoretical computer science, especially programming language theory,[22] compiler construction, and automata theory.[23]
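The four levels of the hierarchy are conventionally labelled Type 0 (recursively enumerable), Type 1 (context-sensitive), Type 2 (context-free), and Type 3 (regular), with each level containing the next. A standard textbook contrast between the two lowest levels can be sketched in Python as follows. The function names are hypothetical, and the example languages are chosen for illustration rather than drawn from the sources cited here.

```python
import re

# Type 3 (regular): any number of a's followed by any number of b's.
# A regular expression is enough because no counting is required.
REGULAR_AB = re.compile(r"a*b*")


def is_regular_ab(s: str) -> bool:
    """Return True if `s` consists of zero or more a's, then zero or more b's."""
    return REGULAR_AB.fullmatch(s) is not None


def is_anbn(s: str) -> bool:
    """Return True if `s` is n a's followed by exactly n b's (n >= 0).

    The recursion mirrors the context-free rule S -> a S b | (empty).
    This language is context-free but not regular.
    """
    if s == "":
        return True
    if len(s) >= 2 and s[0] == "a" and s[-1] == "b":
        return is_anbn(s[1:-1])
    return False


for candidate in ["", "ab", "aabb", "aab", "ba"]:
    print(repr(candidate), is_regular_ab(candidate), is_anbn(candidate))
```

Every string accepted by `is_anbn` is also accepted by `is_regular_ab`, but not the reverse: `"aab"` belongs to the regular language and not to the context-free one, because matching the number of a's to the number of b's requires unbounded memory.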

Transformational grammar was the dominant research paradigm through the mid-1970s. The derivative government and binding theory[18] replaced it and remained influential through the early 1990s,[18] when linguists turned to a "minimalist" approach to grammar. This research focused on the principles and parameters framework, which explained children's ability to learn any language by filling open parameters (a set of universal grammar principles) that adapt as the child encounters linguistic data.[24] The minimalist program, initiated by Chomsky,[25] asks which minimal principles and parameters theory fits most elegantly, naturally, and simply.[24] In an attempt to simplify language into a system that relates meaning and sound using the minimum possible faculties, Chomsky dispenses with concepts such as "deep structure" and "surface structure" and instead emphasizes the plasticity of the brain's neural circuits, with which come an infinite number of concepts, or "logical forms".[5] When exposed to linguistic data, a hearer-speaker's brain proceeds to associate sound and meaning, and the rules of grammar we observe are in fact only the consequences, or side effects, of the way language works. Thus, while much of Chomsky's prior research focused on the rules of language, he now focuses on the mechanisms the brain uses to generate these rules and regulate speech.[5][26]
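The notion of an open parameter that is set by exposure to data can be made concrete with a deliberately simplified sketch. The example below uses head direction (whether a verb precedes or follows its object), a parameter often used to illustrate the framework. The majority-vote procedure, the tag scheme, and the function name `set_head_direction` are assumptions made for illustration and are not a model proposed by Chomsky or by the sources cited here.

```python
from collections import Counter


def set_head_direction(utterances):
    """Choose a head-direction setting from tagged utterances.

    Each utterance is a list of tags such as ["S", "V", "O"].
    Returns "head-initial", "head-final", or None when no utterance
    contains both a verb ("V") and an object ("O").
    """
    votes = Counter()
    for tags in utterances:
        if "V" in tags and "O" in tags:
            verb_first = tags.index("V") < tags.index("O")
            votes["head-initial" if verb_first else "head-final"] += 1
    if not votes:
        # No relevant evidence yet, so the parameter stays open.
        return None
    return votes.most_common(1)[0][0]


# Hypothetical input: verb-object order, as in English.
print(set_head_direction([["S", "V", "O"], ["S", "V", "O"], ["S", "V"]]))
# Hypothetical input: object-verb order, as in Japanese.
print(set_head_direction([["S", "O", "V"], ["O", "V"]]))
```

The sketch captures only the general shape of the idea: the set of possible settings is fixed in advance, and the input data merely selects among them.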

Selected bibliography

Linguistics

- Syntactic Structures (1957)

- Current Issues in Linguistic Theory (1964)

- Aspects of the Theory of Syntax (1965)

- Cartesian Linguistics (1966)

- Language and Mind (1968)

- The Sound Pattern of English with Morris Halle (1968)

- Reflections on Language (1975)

- Lectures on Government and Binding (1981)

- The Minimalist Program (1995)

Notes

- ↑

- Smith 2004, p. 107: "Chomsky's early work was renowned for its mathematical rigor and he made some contribution to the nascent discipline of mathematical linguistics, in particular the analysis of (formal) languages in terms of what is now known as the Chomsky hierarchy."

- Koerner 1983, p. 159: "Characteristically, Harris proposes a transfer of sentences from English to Modern Hebrew ... Chomsky's approach to syntax in Syntactic Structures and several years thereafter was not much different from Harris's approach, since the concept of 'deep' or 'underlying structure' had not yet been introduced. The main difference between Harris (1954) and Chomsky (1957) appears to be that the latter is dealing with transfers within one single language only"

- ↑

- Koerner 1978, pp. 41f: "it is worth noting that Chomsky cites Hjelmslev's Prolegomena, which had been translated into English in 1953, since the authors' theoretical argument, derived largely from logic and mathematics, exhibits noticeable similarities."

- Seuren 1998, p. 166: "Both Hjelmslev and Harris were inspired by the mathematical notion of an algorithm as a purely formal production system for a set of strings of symbols. ... it is probably accurate to say that Hjelmslev was the first to try and apply it to the generation of strings of symbols in natural language"

- Hjelmslev 1969 Prolegomena to a Theory of Language. Danish original 1943; first English translation 1954.

References

1. Baughman et al. 2006.

2. Lyons 1978, p. 4; McGilvray 2014, pp. 2–3.

3. Lyons 1978, p. 7.

4. Lyons 1978, p. 6; McGilvray 2014, pp. 2–3.

5. Brain From Top To Bottom.

6. McGilvray 2014, p. 11.

7. Dovey 2015.

8. Chomsky.

9. Thornbury 2006, p. 234.

10. O'Grady 2015.

11. Christiansen & Chater 2010, p. 489; Ruiter & Levinson 2010, p. 518.

12. Evans & Levinson 2009, p. 429; Tomasello 2009, p. 470.

13. Tomasello 2003, p. 284.

14. Tomasello 1995, p. 131.

15. Fernald & Marchman 2006, pp. 1027–1071.

16. de Bot 2015, pp. 57–61.

17. Pullum & Scholz 2002, pp. 9–50.

18. Harlow 2010, p. 752.

19. Harlow 2010, pp. 752–753.

20. Harlow 2010, p. 753.

21. Butterfield, Ngondi & Kerr 2016.

22. Knuth 2002.

23. Davis, Weyuker & Sigal 1994, p. 327.

24. Hornstein 2003.

25. Szabó 2010.

26. Fox 1998.

Works cited

- Baughman, Judith S.; Bondi, Victor; Layman, Richard; McConnell, Tandy; Tompkins, Vincent, eds. (2006). "Noam Chomsky". American Decades. Detroit, MI: Gale. Archived from the original on February 14, 2022.

- "Tool Module: Chomsky's Universal Grammar". The Brain From Top To Bottom. McGill University. Archived from the original on September 10, 2017. Retrieved December 24, 2015.

- Butterfield, Andrew; Ngondi, Gerard Ekembe; Kerr, Anne, eds. (2016). "Chomsky hierarchy". A Dictionary of Computer Science. Oxford University Press. ISBN 978-0-19-968897-5.

- Chomsky, Noam. "The 'Chomskyan Era' (excerpted from The Architecture of Language)". Chomsky.info. Archived from the original on September 23, 2015. Retrieved January 3, 2017.

- Christiansen, Morten H.; Chater, Nick (October 2010). "Language as shaped by the brain". Behavioral and Brain Sciences. 31 (5): 489–509. doi:10.1017/S0140525X08004998. ISSN 1469-1825. PMID 18826669.

- Davis, Martin; Weyuker, Elaine J.; Sigal, Ron (1994). Computability, complexity, and languages: fundamentals of theoretical computer science (2nd ed.). Boston: Academic Press, Harcourt, Brace. p. 327. ISBN 978-0-12-206382-4.

- de Bot, Kees (2015). A History of Applied Linguistics: From 1980 to the Present. Routledge. ISBN 978-113882065-4.

- Dovey, Dana (December 7, 2015). "Noam Chomsky's Theory Of Universal Grammar Is Right; It's Hardwired Into Our Brains". Medical Daily. Archived from the original on November 12, 2021. Retrieved August 4, 2017.

- Evans, Nicholas; Levinson, Stephen C. (October 2009). "The myth of language universals: Language diversity and its importance for cognitive science". Behavioral and Brain Sciences. 32 (5): 429–448. doi:10.1017/S0140525X0999094X. hdl:11858/00-001M-0000-0012-C29E-4. ISSN 1469-1825. PMID 19857320.

- Fernald, Anne; Marchman, Virginia A. (2006). "Language learning in infancy". In Traxler, Matthew; Gernsbacher, Morton Ann (eds.). Handbook of Psycholinguistics. Academic Press. pp. 1027–1071. ISBN 978-008046641-5.

- Fox, Margalit (December 5, 1998). "A Changed Noam Chomsky Simplifies". The New York Times. ISSN 0362-4331. Archived from the original on April 27, 2021. Retrieved February 22, 2016.

- Harlow, S. J. (2010). "Transformational Grammar: Evolution". In Barber, Alex; Stainton, Robert J. (eds.). Concise Encyclopedia of Philosophy of Language and Linguistics. Elsevier. pp. 752–770. ISBN 978-0-08-096501-7.

- Hjelmslev, Louis (1969) [First published 1943]. Prolegomena to a Theory of Language. University of Wisconsin Press. ISBN 0299024709.

- Hornstein, Norbert (2003). "Minimalist Program". International Encyclopedia of Linguistics. Oxford University Press. ISBN 978-0-19-513977-8.

- Knuth, Donald (2002). "Preface". Selected Papers on Computer Languages. Center for the Study of Language and Information. ISBN 978-1-57586-381-8.

- Koerner, E. F. K. (1978). "Towards a historiography of linguistics". Toward a Historiography of Linguistics: Selected Essays. John Benjamins. pp. 21–54.

- Koerner, E. F. K. (1983). "The Chomskyan 'revolution' and its historiography: a few critical remarks". Language & Communication. 3 (2): 147–169. doi:10.1016/0271-5309(83)90012-5.

- Lyons, John (1978). Noam Chomsky (revised ed.). Harmondsworth: Penguin. ISBN 978-0-14-004370-9.

- McGilvray, James (2014). Chomsky: Language, Mind, Politics (2nd ed.). Cambridge: Polity. ISBN 978-0-7456-4989-4.

- O'Grady, Cathleen (June 8, 2015). "MIT claims to have found a "language universal" that ties all languages together". Ars Technica. doi:10.1073/pnas.1502134112. Archived from the original on December 15, 2021. Retrieved June 14, 2017.

- Pullum, Geoffrey; Scholz, Barbara (2002). "Empirical assessment of stimulus poverty arguments" (PDF). The Linguistic Review. 19 (1–2): 9–50. doi:10.1515/tlir.19.1-2.9. S2CID 143735248. Archived (PDF) from the original on February 3, 2021.

- Ruiter, J. P. de; Levinson, Stephen C. (October 2010). "A biological infrastructure for communication underlies the cultural evolution of languages". Behavioral and Brain Sciences. 31 (5): 518. doi:10.1017/S0140525X08005086. hdl:11858/00-001M-0000-0013-1FE2-5. ISSN 1469-1825.

- Seuren, Pieter A. M. (1998). Western linguistics: An historical introduction. Wiley-Blackwell. ISBN 0-631-20891-7.

- Smith, Neil (2004). Chomsky: Ideas and Ideals. Cambridge University Press. ISBN 978-0521546881.

- Szabó, Zoltán Gendler (2010). "Chomsky, Noam Avram (1928–)". In Shook, John R. (ed.). The Dictionary of Modern American Philosophers. Continuum. ISBN 978-0-19-975466-3.

- Thornbury, Scott (2006). An A–Z of ELT (Methodology). Oxford: Macmillan Education. p. 234. ISBN 978-1405070638.

- Tomasello, Michael (January 1995). "Language is not an instinct". Cognitive Development. 10 (1): 131–156. doi:10.1016/0885-2014(95)90021-7. ISSN 0885-2014.

- Tomasello, Michael (2003). Constructing a Language: A Usage-Based Theory of Language Acquisition. Cambridge, MA: Harvard University Press. ISBN 978-0-674-01030-7.

- Tomasello, Michael (October 2009). "Universal grammar is dead". Behavioral and Brain Sciences. 32 (5): 470–471. doi:10.1017/S0140525X09990744. ISSN 1469-1825. S2CID 144188188.