Speech science is the study of the production, transmission, and perception of speech. It draws on anatomy (in particular the anatomy of the orofacial region and neuroanatomy), physiology, and acoustics.

Speech production

The production of speech is a highly complex motor task that involves approximately 100 orofacial, laryngeal, pharyngeal, and respiratory muscles.[2][3] Precise and rapid timing of these muscles is essential for producing temporally complex speech sounds, which are characterized by transitions as short as 10 ms between frequency bands[4] and an average speaking rate of approximately 15 sounds per second. Speech production requires airflow from the lungs (respiration) to be set into vibration at the vocal folds of the larynx (phonation) and then resonated in the vocal cavities, which are shaped by the jaw, soft palate, lips, tongue, and other articulators (articulation).
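The two timing figures quoted above can be related with simple arithmetic. The short Python sketch below only restates those figures. It assumes that every sound lasts the average duration, which real speech does not do. The constant and function names are illustrative, not taken from any source.

```python
# Back-of-the-envelope timing from the figures quoted in the text (illustrative only).
SOUNDS_PER_SECOND = 15.0       # average speaking rate cited in the text
SHORTEST_TRANSITION_MS = 10.0  # shortest transitions between frequency bands cited in the text


def mean_sound_duration_ms(sounds_per_second: float) -> float:
    """Average time available per speech sound, in milliseconds."""
    return 1000.0 / sounds_per_second


def transition_share(transition_ms: float, sound_ms: float) -> float:
    """Fraction of an average sound's duration taken up by one transition."""
    return transition_ms / sound_ms


sound_ms = mean_sound_duration_ms(SOUNDS_PER_SECOND)
share = transition_share(SHORTEST_TRANSITION_MS, sound_ms)
print(f"average sound: {sound_ms:.1f} ms; a 10 ms transition is {share:.0%} of it")
```

At 15 sounds per second, each sound lasts about 67 ms on average. A 10 ms transition therefore takes up roughly 15% of a typical sound.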

Respiration

Respiration is the physical process of gas exchange between an organism and its environment. It involves four steps (ventilation, distribution, perfusion, and diffusion) and two processes (inspiration and expiration). Mechanically, respiration can be described as air flowing into and out of the lungs according to Boyle's law, which states that, at constant temperature, the pressure of a fixed amount of gas decreases as the volume of its container increases. The resulting relatively negative pressure causes air to enter the container until the pressures are equalized. During inspiration, the diaphragm contracts and the lungs expand, drawn outward by the pleurae through surface tension and negative pressure. As the lungs expand, the air pressure inside them becomes negative relative to atmospheric pressure, and air flows in from the area of higher pressure to fill them. Forced inspiration for speech uses accessory muscles to elevate the rib cage and enlarge the thoracic cavity in the vertical and lateral dimensions. During forced expiration for speech, muscles of the trunk and abdomen reduce the size of the thoracic cavity by compressing the abdomen or pulling the rib cage down, forcing air out of the lungs.
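Boyle's law can be written as P1·V1 = P2·V2 for a fixed amount of gas at constant temperature. The Python sketch below applies that relation to a hypothetical closed lung volume. The 3.0 L and 3.1 L volumes are made-up illustration values. The model also assumes that no air enters during the expansion. In real breathing, air flows in continuously, so the actual pressure difference is much smaller than this idealized figure.

```python
def boyle_pressure(p1: float, v1: float, v2: float) -> float:
    """Pressure after an isothermal volume change of a fixed amount of gas (P1*V1 = P2*V2)."""
    if v1 <= 0 or v2 <= 0:
        raise ValueError("volumes must be positive")
    return p1 * v1 / v2


ATMOSPHERIC_KPA = 101.325  # standard atmospheric pressure in kPa

# Hypothetical example: a closed 3.0 L volume enlarged to 3.1 L before any air enters.
p_after = boyle_pressure(ATMOSPHERIC_KPA, 3.0, 3.1)
pressure_difference = ATMOSPHERIC_KPA - p_after  # positive: inside pressure is below atmospheric
print(f"pressure after expansion: {p_after:.2f} kPa "
      f"({pressure_difference:.2f} kPa below atmospheric)")
```

Enlarging the volume lowers the pressure below atmospheric. This pressure difference is what drives air into the lungs.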

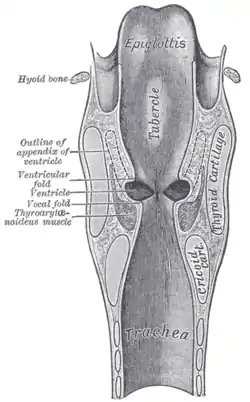

Phonation

Phonation is the production of a periodic sound wave by vibration of the vocal folds. Airflow from the lungs, together with contraction of the laryngeal muscles, moves the vocal folds. Their tension and elasticity allow the vocal folds to be stretched, bunched, brought together, and separated. During prephonation, the vocal folds move from the abducted (open) to the adducted (closed) position. Subglottal pressure then builds until the airflow forces the folds apart, from their lower edge to their upper edge. If the volume of airflow is constant, its velocity increases as it passes through the narrow opening between the folds, and the pressure there decreases. This negative pressure pulls the blown-open folds back together. The cycle repeats until the vocal folds are abducted to stop phonation or to take a breath.
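The velocity increase and pressure drop at the constriction are usually explained by the continuity equation (v = Q / A) and the Bernoulli effect (ΔP = ½ρ(v₂² − v₁²)). The Python sketch below assumes idealized steady, inviscid, incompressible flow. Real glottal airflow is considerably more complex. The flow rate, cross-sectional areas, and air density are hypothetical illustration values, and the helper names are made up for this example.

```python
AIR_DENSITY = 1.2  # kg/m^3, assumed value for air near room temperature


def flow_velocity(volume_flow_m3_s: float, area_m2: float) -> float:
    """Continuity equation: for a constant volume flow Q, velocity v = Q / A."""
    return volume_flow_m3_s / area_m2


def bernoulli_pressure_drop(v_upstream: float, v_constriction: float,
                            density: float = AIR_DENSITY) -> float:
    """Pressure drop (Pa) from the wide section to the narrow one for ideal flow."""
    return 0.5 * density * (v_constriction ** 2 - v_upstream ** 2)


# Hypothetical values chosen only for illustration.
Q = 2e-4             # m^3/s (0.2 L/s), assumed constant volume flow
AREA_BELOW = 2.5e-4  # m^2, assumed airway cross-section below the vocal folds
AREA_GLOTTIS = 1e-5  # m^2, assumed narrow opening between the vocal folds

v_below = flow_velocity(Q, AREA_BELOW)
v_glottis = flow_velocity(Q, AREA_GLOTTIS)
drop_pa = bernoulli_pressure_drop(v_below, v_glottis)
print(f"velocity rises from {v_below:.1f} to {v_glottis:.1f} m/s; "
      f"pressure falls by about {drop_pa:.0f} Pa")
```

The same volume of air must pass through a much smaller opening, so it speeds up and its pressure falls.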

Articulation

Articulation is the third process of speech production. Mobile and immobile structures of the face and mouth (the articulators) adjust the shape of the oral, pharyngeal, and nasal cavities (the vocal tract) as the sound produced by vocal fold vibration passes through them, producing varying resonant frequencies.
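The simplest textbook model treats the vocal tract as a uniform tube that is closed at the vocal folds and open at the lips. Its resonances then fall at F_n = (2n − 1)·c / (4L). The Python sketch below uses an assumed tract length of 17.5 cm and an assumed speed of sound of 350 m/s. This uniform-tube model only approximates a neutral vowel. Articulation changes the tube's shape, and with it these resonant frequencies.

```python
SPEED_OF_SOUND = 350.0      # m/s, assumed value for warm, humid air in the vocal tract
VOCAL_TRACT_LENGTH = 0.175  # m, assumed length of an adult vocal tract


def quarter_wave_resonances(length_m: float, speed_m_s: float, count: int = 3) -> list:
    """Resonances of a uniform tube closed at one end: F_n = (2n - 1) * c / (4 * L)."""
    return [(2 * n - 1) * speed_m_s / (4 * length_m) for n in range(1, count + 1)]


resonances = quarter_wave_resonances(VOCAL_TRACT_LENGTH, SPEED_OF_SOUND)
for n, freq in enumerate(resonances, start=1):
    print(f"resonance {n}: {freq:.0f} Hz")
```

With these assumed values, the model gives resonances near 500, 1500, and 2500 Hz. A shorter tube shifts all of them upward.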

Central nervous control

The analysis of brain lesions and the correlation between lesion locations and behavioral deficits were the most important sources of knowledge about the cerebral mechanisms underlying speech production for many years.[5][6] The seminal lesion studies of Paul Broca indicated that the production of speech relies on the functional integrity of the left inferior frontal gyrus.[7]

More recently, noninvasive neuroimaging techniques such as functional magnetic resonance imaging (fMRI) have provided growing evidence that complex human skills are not located primarily in highly specialized brain areas (e.g., Broca's area) but are instead organized in networks connecting several areas in both hemispheres. Functional neuroimaging has identified a complex neural network underlying speech production that includes cortical and subcortical areas, such as the supplementary motor area, cingulate motor areas, primary motor cortex, basal ganglia, and cerebellum.[8][9]

Speech perception

Speech perception refers to the understanding of speech. The process begins with hearing the spoken message. The auditory system receives sound at the outer ear: sound waves are collected by the pinna, travel through the external auditory canal (ear canal), and reach the eardrum. In the middle ear, which contains the malleus, the incus, and the stapes, the sound is converted into mechanical energy. This mechanical energy is transmitted to the oval window, the entrance to the inner ear. In the inner ear, it is converted into hydraulic energy as it passes through the fluid-filled cochlea to the organ of Corti. The organ of Corti transforms it into neural impulses that stimulate the auditory pathway and reach the brain. Sound is then processed in Heschl's gyrus and associated with meaning in Wernicke's area.

Theories of speech perception include a motor theory and an auditory theory. The motor theory is based on the premise that speech sounds are encoded in the acoustic signal rather than enciphered in it. The auditory theory places greater emphasis on the listener's sensory and filtering mechanisms. It suggests that speech knowledge plays only a minor role, used mainly under difficult perceptual conditions.

Transmission of speech

Speech is transmitted through sound waves, which follow the basic principles of acoustics. The source of all sound is vibration. For sound to exist, a source (something put into vibration) and a medium (something to transmit the vibrations) are necessary.

Sound waves are produced by a vibrating body. As the vibrating object moves in one direction, it compresses the air directly in front of it. As it moves in the opposite direction, the pressure on the air is reduced, and an expansion, or rarefaction, of air molecules occurs. One compression and one rarefaction make up one cycle of a longitudinal wave. The vibrating air molecules move back and forth parallel to the direction in which the wave travels. They receive energy from adjacent molecules nearer the source and pass it on to adjacent molecules farther from the source. Sound waves therefore have two general characteristics: the disturbance travels through an identifiable medium that transmits energy from place to place, and the medium itself does not travel between those places.

Important basic characteristics of waves are wavelength, amplitude, period, and frequency. Wavelength is the length of the repeating wave shape. Amplitude is the maximum displacement of the particles of the medium and is determined by the energy of the wave. The period (measured in seconds) is the time it takes for one complete wave to pass a given point. Frequency is the number of waves passing a given point per unit of time, which makes it the reciprocal of the period. Each complete vibration of a sound wave is called a cycle, and frequency is measured in hertz (Hz; cycles per second). Frequency is perceived as pitch. Two other physical properties of sound are intensity and duration. Intensity is commonly expressed as a level in decibels (dB) and is perceived as loudness.
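These quantities are linked by a few standard relations. The period is T = 1 / f, and the wavelength is λ = c / f, where c is the wave speed. Sound intensity level in decibels is 10·log10(I / I0), where I0 = 10⁻¹² W/m² is the conventional reference. The Python sketch below assumes a speed of sound of 343 m/s, which is typical for dry air at about 20 °C. The function names are illustrative.

```python
import math

SPEED_OF_SOUND_AIR = 343.0   # m/s, assumed: dry air at about 20 degrees C
REFERENCE_INTENSITY = 1e-12  # W/m^2, conventional reference for sound intensity level


def period_s(frequency_hz: float) -> float:
    """Period is the reciprocal of frequency: T = 1 / f."""
    return 1.0 / frequency_hz


def wavelength_m(frequency_hz: float, speed_m_s: float = SPEED_OF_SOUND_AIR) -> float:
    """Wavelength from wave speed and frequency: lambda = c / f."""
    return speed_m_s / frequency_hz


def intensity_level_db(intensity_w_m2: float) -> float:
    """Sound intensity level in decibels relative to REFERENCE_INTENSITY."""
    return 10.0 * math.log10(intensity_w_m2 / REFERENCE_INTENSITY)


for f in (100.0, 1000.0):
    print(f"{f:.0f} Hz: period {period_s(f) * 1000:.1f} ms, wavelength {wavelength_m(f):.3f} m")
print(f"1e-6 W/m^2 corresponds to about {intensity_level_db(1e-6):.0f} dB")
```

Because the decibel scale is logarithmic, every tenfold increase in intensity adds 10 dB.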

There are two types of tones: pure tones and complex tones. A tuning fork produces a pure tone because its sound consists of a single frequency. Instruments get their characteristic sound, or timbre, because it is made up of many tones sounding together at different frequencies. A single note played on a piano, for example, actually consists of several tones sounding together: a fundamental and a series of higher overtones.
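The difference between a pure and a complex tone shows up clearly in a spectrum. The Python sketch below builds both tones as sums of sine waves and uses a naive discrete Fourier transform (DFT) to list the frequencies present in each. The 100 Hz fundamental, the exact harmonic partials, the amplitudes, and the 800 Hz sample rate are all hypothetical illustration choices. Real instruments, including the piano, have partials that are not perfectly harmonic. A real application would use an FFT library rather than this O(N²) loop.

```python
import cmath
import math


def synthesize(partials, sample_rate, n_samples):
    """Sum of sine waves; `partials` is a list of (frequency_hz, amplitude) pairs."""
    return [
        sum(amp * math.sin(2 * math.pi * freq * i / sample_rate) for freq, amp in partials)
        for i in range(n_samples)
    ]


def spectral_peaks(signal, sample_rate, threshold=0.05):
    """Frequencies (Hz) whose naive-DFT amplitude exceeds `threshold`.

    The factor 2/N rescales each bin so that a sine of amplitude A appears as roughly A
    (valid for bins strictly between 0 Hz and the Nyquist frequency).
    """
    n = len(signal)
    peaks = []
    for k in range(n // 2 + 1):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(signal))
        if 2 * abs(coeff) / n > threshold:
            peaks.append(k * sample_rate / n)
    return peaks


SAMPLE_RATE = 800  # Hz; with 800 samples, each DFT bin is exactly 1 Hz wide
N_SAMPLES = 800

pure = synthesize([(100.0, 1.0)], SAMPLE_RATE, N_SAMPLES)
complex_tone = synthesize([(100.0, 1.0), (200.0, 0.5), (300.0, 0.25)], SAMPLE_RATE, N_SAMPLES)

print("pure tone peaks:", spectral_peaks(pure, SAMPLE_RATE))             # [100.0]
print("complex tone peaks:", spectral_peaks(complex_tone, SAMPLE_RATE))  # [100.0, 200.0, 300.0]
```

The pure tone produces a single spectral peak, while the complex tone produces one peak per component. The relative strength of these components is what distinguishes one timbre from another.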

References

- Gray's Anatomy of the Human Body, 20th ed. 1918.

- Simonyan K, Horwitz B (April 2011). "Laryngeal motor cortex and control of speech in humans". Neuroscientist. 17 (2): 197–208. doi:10.1177/1073858410386727. PMC 3077440. PMID 21362688.

- Levelt WJM (1989). Speaking: From Intention to Articulation. Cambridge, Mass.: MIT Press. ISBN 978-0-262-12137-8. OCLC 18136175.

- Fitch RH, Miller S, Tallal P (1997). "Neurobiology of speech perception". Annu. Rev. Neurosci. 20: 331–53. doi:10.1146/annurev.neuro.20.1.331. PMID 9056717.

- Huber P, Gutbrod K, Ozdoba C, Nirkko A, Lövblad KO, Schroth G (January 2000). "[Aphasia research and speech localization in the brain]". Schweiz Med Wochenschr (in German). 130 (3): 49–59. PMID 10683880.

- Rorden C, Karnath HO (October 2004). "Using human brain lesions to infer function: a relic from a past era in the fMRI age?". Nat. Rev. Neurosci. 5 (10): 813–9. doi:10.1038/nrn1521. PMID 15378041.

- Broca P (1861). "Remarques sur le siége de la faculté du langage articulé, suivies d'une observation d'aphémie (perte de la parole)". Bulletin de la Société Anatomique. York University, Toronto, Ontario. 6: 330–357. Retrieved 20 December 2013.

- Riecker A, Mathiak K, Wildgruber D, et al. (February 2005). "fMRI reveals two distinct cerebral networks subserving speech motor control". Neurology. 64 (4): 700–6. doi:10.1212/01.WNL.0000152156.90779.89. PMID 15728295.

- Sörös P, Sokoloff LG, Bose A, McIntosh AR, Graham SJ, Stuss DT (August 2006). "Clustered functional MRI of overt speech production". NeuroImage. 32 (1): 376–87. doi:10.1016/j.neuroimage.2006.02.046. PMID 16631384.

Further reading

- Behrman A (2013). Speech and Voice Science. San Diego, Calif.: Plural Publishing. ISBN 978-1-59756-481-6. OCLC 836744549.

- Hickok G, Houde J, Rong F (February 2011). "Sensorimotor integration in speech processing: computational basis and neural organization". Neuron. 69 (3): 407–22. doi:10.1016/j.neuron.2011.01.019. PMC 3057382. PMID 21315253.